Content quality is a significant factor in search engine rankings and user engagement. Identifying pages with low-quality or thin content can help you enhance your website’s value, improve SEO, and provide a better experience for your visitors. In this guide, I will show you how to use Screaming Frog’s Custom JavaScript feature and OpenAI’s API to automatically assess the quality of your content.

Manually auditing website content for quality issues is a daunting and time-consuming task. Sifting through each page to assess originality, relevance, and value can be overwhelming, especially for large websites with hundreds or thousands of pages.

The challenge intensifies when trying to detect AI-generated content, which can undermine the uniqueness and authenticity of your site. These pain points highlight the need for an automated solution that efficiently identifies low-quality, thin, or AI-written content, enabling you to focus your efforts on enhancing your website’s overall quality.

Combining Screaming Frog’s Custom JavaScript feature with OpenAI’s API automates this process, saving you time and giving you a comprehensive content audit that you can act on.

What you’ll need:

- Screaming Frog SEO Spider: Version 20.0 or later.

- OpenAI API Key: Access to an OpenAI chat model via the API. The script uses GPT-4o; GPT-4o-mini or GPT-3.5-turbo can be used instead.

- Microsoft Excel: For data analysis.

Note: You must have an active OpenAI API key. Sign up at OpenAI’s website if you don’t have one.

Overview of the Process

- Configure Screaming Frog: Set up Screaming Frog to render JavaScript and use a custom JavaScript code snippet.

- Use OpenAI’s API: The custom JavaScript sends page content to OpenAI’s API, which evaluates the content quality.

- Crawl Your Website: Screaming Frog crawls the specified URLs, collecting quality scores and assessments.

- Analyze the Data: Export the crawl data to Excel, extract the scores, and identify pages needing improvement.

Step-by-Step Guide

1. Start Screaming Frog SEO Spider

Launch the Screaming Frog SEO Spider application on your computer.

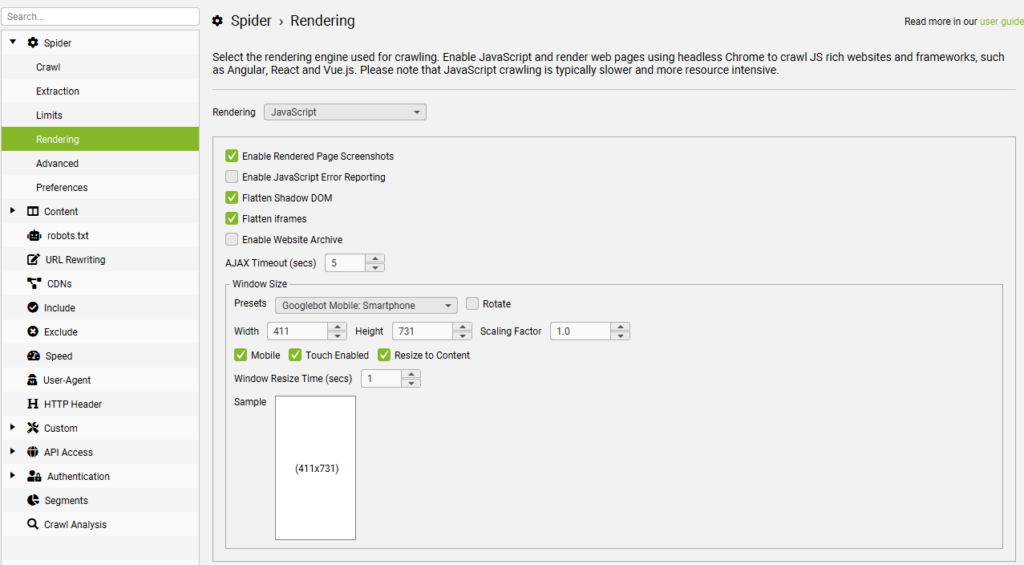

2. Enable JavaScript Rendering

To accurately render dynamic content and execute our script:

- Go to Configuration > Spider.

- Click on the Rendering tab.

- Select JavaScript from the rendering options.

- Click OK.

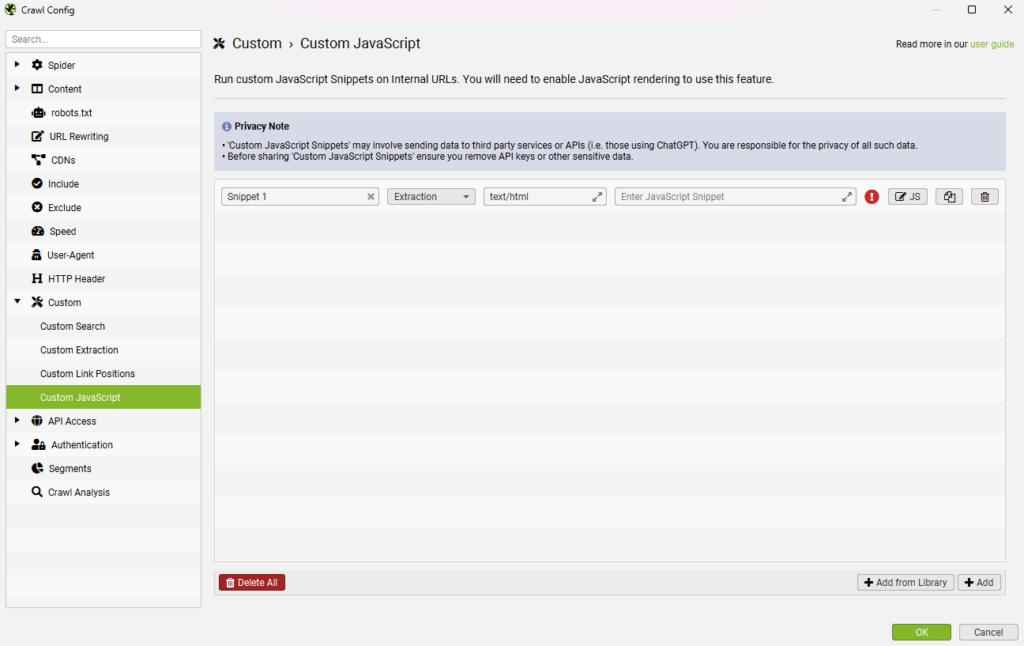

3. Configure Custom JavaScript

Set up the custom JavaScript snippet that communicates with OpenAI’s API:

- Go to Configuration > Custom > JavaScript.

- Click on Add to create a new snippet.

- Click the JS icon to open the JavaScript code editor.

Insert the Custom JavaScript Code

Copy and paste the following code into the script editor:

```javascript
// Ask ChatGPT to assess content quality
//
// Adjust the value of 'question' below to change the prompt.
// Adjust the value of 'userContentList' below; it is currently set to body text.
// Other examples, such as page title, meta description, and h1 or h2 headings,
// are shown, commented out, directly below it.
//
// This script demonstrates how JavaScript Snippets can communicate with
// APIs, in this case ChatGPT.
//
// This script also shows how the Spider will wait for JavaScript Promises to
// be fulfilled, i.e. the fetch request to the ChatGPT API, when fulfilled,
// will return the data to the Spider.
//
// IMPORTANT:
// You will need to supply your API key below, which will be stored
// as part of your SEO Spider configuration in plain text. Also be mindful when
// sharing this script that you will be sharing your API key too, unless you
// delete it before sharing.
//
// Also be aware of API limits when crawling large websites with this snippet.
//

const OPENAI_API_KEY = 'YOUR_OPENAI_API_KEY';

const question = `**The Score is: %%**

You are an expert in detecting low-quality, thin, and unhelpful content. Start your response with **The Score is: %%** followed by your analysis.

**Assessment:**

Consider the following criteria, citing specific examples from the text to support your analysis:

- **Lack of Originality:** Does the content provide unique insights, personal experiences, or well-researched perspectives, or is it simply rehashing common knowledge?

- **Unnatural Phrasing:** Does the language sound robotic, awkward, or overly formal? Does it lack a natural flow or smooth transitions?

- **Purpose:** Is the content informative, engaging, and relevant for its intended audience and purpose?

- **Value:** Does the text go beyond surface-level observations to offer meaningful information, insights, or analysis?

- **Supporting Evidence:** Are claims supported by credible examples, data, research, or citations? Are the examples relevant and well-explained?

- **Tone:** Is the tone engaging and appropriate for the intended audience? Does it make the text enjoyable to read?

**Reasoning:**

Provide a concise explanation of the reasoning behind your assessment and score.

**Score:**

After your analysis, assign a probability score (0-100%) indicating how likely the content is to be low-quality, thin, or unhelpful:

- **0-20%:** Very unlikely to be low-quality. The text is well-written, informative, and engaging, showing strong signs of quality.

- **21-40%:** Unlikely to be low-quality, though there may be room for improvement.

- **41-60%:** Possibly low-quality, but further analysis may be required.

- **61-80%:** Likely low-quality, with multiple signs of thinness or lack of helpfulness.

- **81-100%:** Very likely low-quality due to numerous factors indicating poor content.`;

// Body text (default)
const userContentList = [document.body.innerText];

// Page title
// const userContentList = [document.title];

// Meta description
// const userContentList = [document.querySelector('meta[name="description"]')?.getAttribute('content')];

// Heading h1 (replace with h2 etc. as required)
// const userContentList = [...document.querySelectorAll('h1')].map(h => h.textContent);

function chatGptRequest(userContent) {
  return fetch('https://api.openai.com/v1/chat/completions', {
    method: 'POST',
    headers: {
      'Authorization': `Bearer ${OPENAI_API_KEY}`,
      'Content-Type': 'application/json',
    },
    body: JSON.stringify({
      "model": "gpt-4o",
      "messages": [
        { role: "user", content: `${question} ${userContent}` }
      ],
      "temperature": 0.7
    })
  })
    .then(response => {
      if (!response.ok) {
        // Surface the API's error message (e.g. invalid key, rate limit)
        return response.text().then(text => { throw new Error(text); });
      }
      return response.json();
    })
    // The model's reply is in choices[0].message.content
    .then(data => data.choices[0].message.content.trim());
}

// Send one request per entry in userContentList and pass the results to the Spider
return Promise.all(userContentList.map(userContent => chatGptRequest(userContent)))
  .then(data => seoSpider.data(data))
  .catch(error => seoSpider.error(error));
```

Important: Replace `YOUR_OPENAI_API_KEY` with your actual OpenAI API key. Keep your API key secure and do not share it publicly.

Save the Script

- Give your script a recognizable name, such as “Content Quality Assessment”.

- Click OK to save the script.

4. Import URLs to Audit

You can either crawl your entire website or import a list of specific URLs (the latter is recommended).

To Import URLs:

- Go to Mode > List.

- Click on Upload > Paste.

- Paste your list of URLs into the dialog box.

- Click OK.

5. Start the Crawl

- Click the Start button to begin crawling.

- Screaming Frog will crawl each URL, run the custom JavaScript, and collect the data.

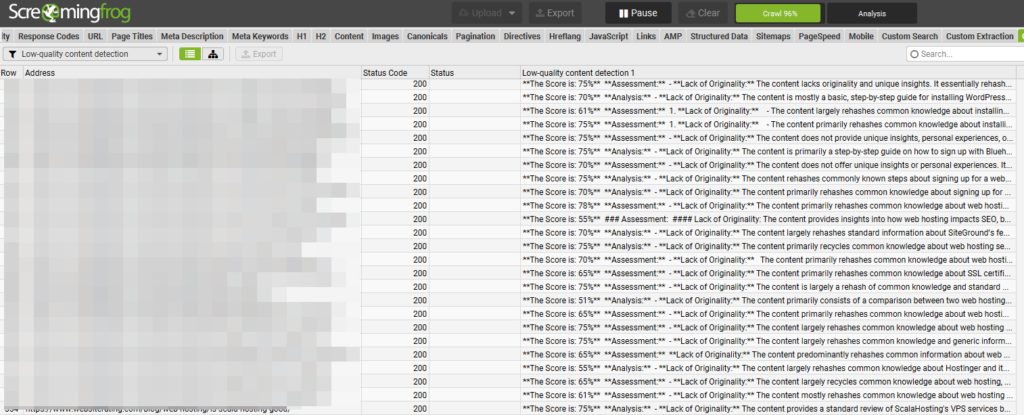

- Go to the Custom JavaScript tab to check the progress of the crawl.

6. Export and Analyze the Results

Export the Data

- Once the crawl is complete, click the Export button in the Custom JavaScript tab.

- Save the export as an Excel or CSV file.

Open in Excel

- Open the file in Microsoft Excel.

- You’ll see the URLs along with the data returned from the custom JavaScript (i.e., the content assessments).

Understanding the Custom JavaScript Snippet

The custom JavaScript snippet performs the following actions:

- Defines a Prompt: Crafts a detailed prompt instructing the AI to assess content quality based on several criteria.

- Extracts Page Content: Retrieves the inner text from the page’s body (`document.body.innerText`).

  - Optional: You can adjust `userContentList` to target specific elements, such as titles or meta descriptions.

- Calls OpenAI’s API: Sends a request to the OpenAI API with the prompt and the page content.

- Processes the Response: Receives and returns the AI’s assessment, which includes a score and reasoning.
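For reference, the Chat Completions endpoint returns JSON in which the generated text sits at `choices[0].message.content`, which is the path the snippet reads. The sketch below pulls that text out a little more defensively, so an unexpected response produces a readable error instead of a `TypeError`. `extractAssessment` is a hypothetical helper name, and the sample object is a trimmed, made-up response used only to show the shape, not real API output.

```javascript
// Hypothetical helper: pull the model's reply out of a Chat Completions
// response object, mirroring data.choices[0].message.content.trim() in the
// snippet, but throwing a descriptive error if the shape is unexpected.
function extractAssessment(data) {
  const content = data?.choices?.[0]?.message?.content;
  if (typeof content !== 'string') {
    throw new Error('Unexpected API response: ' + JSON.stringify(data));
  }
  return content.trim();
}

// Illustrative, trimmed response shape (not real output).
const sample = {
  choices: [
    {
      index: 0,
      message: { role: 'assistant', content: '  The Score is: 35%\n\n**Assessment:** ...  ' }
    }
  ]
};

// Logs the trimmed reply, starting with "The Score is: 35%"
console.log(extractAssessment(sample));
```

Inside the snippet, the last `.then(...)` in `chatGptRequest` could call a helper like this instead of indexing into the response directly.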

Key Variables Explained

- `OPENAI_API_KEY`: Your OpenAI API key for authentication.

- `question`: The prompt provided to the AI.

- `userContentList`: An array containing the content to be analyzed.
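Because the snippet sends each entry in `userContentList` as its own request, very long pages mean large prompts and higher costs. A minimal sketch, assuming you want to cap the text before it is sent: the hypothetical `limitContent` helper collapses whitespace and truncates to a character budget. The 12,000-character default is an arbitrary example, not a figure from OpenAI or Screaming Frog.

```javascript
// Hypothetical helper: tidy and cap page text before it is sent to the API.
// Collapses runs of whitespace (innerText often contains many blank lines)
// and truncates the result to at most maxChars characters.
function limitContent(text, maxChars = 12000) {
  const cleaned = (text ?? '').replace(/\s+/g, ' ').trim();
  return cleaned.length > maxChars ? cleaned.slice(0, maxChars) : cleaned;
}

// In the snippet, this would replace the default definition, e.g.:
// const userContentList = [limitContent(document.body.innerText)];
console.log(limitContent('  Hello\n\n   world  ', 8)); // prints: Hello wo
```

Collapsing whitespace discards paragraph breaks, which the model may use when judging flow; drop the `replace` call if you would rather keep the original layout and only truncate.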

Note on Models

- The script uses the "model": "gpt-4o" parameter.

- If you don’t have access to GPT-4o, you can change this to "model": "gpt-4o-mini" or "model": "gpt-3.5-turbo". GPT-4o-mini is also a lower-cost option if you are auditing many URLs.
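One way to make the model easier to swap is to pull it into a constant and build the request body in one place. The sketch below assumes that refactor; `MODEL` and `buildRequestBody` are hypothetical names, while the body fields (`model`, `messages`, `temperature`) are the same ones the snippet already sends.

```javascript
// Hypothetical refactor: keep the model name in one constant so switching
// between "gpt-4o", "gpt-4o-mini", or "gpt-3.5-turbo" is a one-line change.
const MODEL = 'gpt-4o-mini';

// Builds the same JSON body the snippet sends to /v1/chat/completions.
function buildRequestBody(question, userContent, model = MODEL, temperature = 0.7) {
  return JSON.stringify({
    model,
    messages: [{ role: 'user', content: `${question} ${userContent}` }],
    temperature
  });
}

const body = JSON.parse(buildRequestBody('Assess this page:', 'Example text'));
console.log(body.model); // prints: gpt-4o-mini
```

Inside `chatGptRequest`, `body: buildRequestBody(question, userContent)` would then replace the inline `JSON.stringify(...)` call.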

Using Excel to Extract Scores

The AI’s response starts with “The Score is: XX%”, where XX is the numerical score.

To extract this score for sorting and filtering:

- Open the exported file in Excel.

- Insert a New Column: Next to the column containing the AI’s response.

- Use the Custom Excel Formula:

`=VALUE(MID(A2,FIND(":",A2)+2,FIND("%",A2)-FIND(":",A2)-2))/100`

- Replace A2 with the cell reference containing the AI’s response.

- Drag the Formula: Apply it to all rows containing data.

How the Formula Works

- `FIND(":", A2)+2`: Finds the position after the colon and space.

- `FIND("%", A2)`: Finds the position of the percentage symbol.

- `MID(...)`: Extracts the substring containing the score.

- `VALUE(...)`: Converts the extracted text to a numerical value.

- `/100`: Converts the percentage to a decimal (75 becomes 0.75).

Example

If the AI’s response in cell A2 is:

`The Score is: 75% **Assessment:** ... (assessment text)`

The formula will extract 0.75 as a number.
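If you would rather post-process the export outside Excel (for example, in Node.js over the exported responses), the same extraction can be sketched in JavaScript. `extractScore` is a hypothetical helper. Unlike the formula, which relies on the first colon in the cell, it looks for the "The Score is:" phrase itself, tolerates the `**` bold markers the prompt asks for, and returns `null` when no score is found.

```javascript
// Hypothetical helper: JavaScript counterpart to the Excel formula.
// Finds "The Score is: NN%" (bold markers around it are tolerated) and
// returns the score as a fraction between 0 and 1, or null if absent.
function extractScore(response) {
  const match = /The Score is:[*\s]*(\d{1,3}(?:\.\d+)?)\s*%/i.exec(response ?? '');
  if (!match) return null;
  return Number(match[1]) / 100;
}

console.log(extractScore('The Score is: 75% **Assessment:** ...'));       // 0.75
console.log(extractScore('**The Score is: 20%**\n\n**Assessment:** ...')); // 0.2
console.log(extractScore('No score here'));                               // null
```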

Wrap Up

By integrating Screaming Frog with OpenAI’s language models, you can automate the process of auditing your website’s content for quality issues. This method enables you to quickly identify pages that may require improvement, ensuring your site maintains high standards for both users and search engines.

Have you tried using this script to audit your website’s content? I’d love to hear about your experiences. Did you customize the script to suit your specific needs or focus on particular content elements? Share your insights and let us know how this approach has helped you enhance your site’s quality.

Remember to use this powerful tool responsibly, considering API usage limits and privacy concerns. Regularly auditing your content can lead to better SEO performance, increased user engagement, and a stronger online presence.

FAQs

1. Is it safe to share my OpenAI API key in the script?

No, your API key is sensitive information. Never share your API key publicly or include it in scripts that others might access. Always keep it secure.

2. What if I don’t have access to GPT-4o?

You can modify the script to use GPT-4o-mini or GPT-3.5-turbo by changing the model parameter:

`"model": "gpt-4o-mini",`

3. Are there costs associated with using the OpenAI API?

Yes, using the OpenAI API incurs costs based on usage. To save on costs, limit the number of URLs you decide to crawl. Refer to OpenAI’s pricing page for details.
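To get a rough sense of spend before crawling, you can estimate input tokens from the amount of text sent. The sketch below assumes OpenAI’s rule of thumb of roughly four characters per token for English text. `estimateInputCost` is a hypothetical helper, the price per million tokens is a placeholder you should take from OpenAI’s pricing page, and the model’s output tokens are ignored, so treat the result as a lower bound.

```javascript
// Rough, input-only cost estimate for a crawl.
// Assumptions: ~4 characters per token (OpenAI's rule of thumb for English),
// and pricePerMillionInputTokens is supplied by you from OpenAI's pricing page.
// Output tokens (the model's assessment) are not included.
function estimateInputCost({ promptChars, avgPageChars, urlCount, pricePerMillionInputTokens }) {
  const CHARS_PER_TOKEN = 4;
  const tokensPerRequest = Math.ceil((promptChars + avgPageChars) / CHARS_PER_TOKEN);
  const totalTokens = tokensPerRequest * urlCount;
  return {
    totalTokens,
    estimatedCost: (totalTokens / 1_000_000) * pricePerMillionInputTokens
  };
}

// Illustrative numbers only; substitute your own.
const estimate = estimateInputCost({
  promptChars: 2000,
  avgPageChars: 8000,
  urlCount: 500,
  pricePerMillionInputTokens: 1 // placeholder price, not a real quote
});
console.log(estimate); // { totalTokens: 1250000, estimatedCost: 1.25 }
```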

4. How can I adjust the criteria used in the assessment?

You can modify the `question` variable in the script (the template string assigned to `const question`) to include or exclude criteria based on your specific needs.
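If you adjust the criteria often, one option is to generate the criteria section from a list rather than editing the long template string by hand. This is a minimal sketch: `criteria` and `buildQuestion` are hypothetical names, the "Freshness" entry is only an example of a custom criterion, and `scoringInstructions` stands in for the Reasoning and Score sections of the original prompt, which you would paste in yourself.

```javascript
// Hypothetical helper: assemble the prompt's criteria from a list, so
// criteria can be added or removed without editing one long string.
const criteria = [
  ['Lack of Originality', 'Does the content provide unique insights, personal experiences, or well-researched perspectives, or is it simply rehashing common knowledge?'],
  ['Purpose', 'Is the content informative, engaging, and relevant for its intended audience and purpose?'],
  // Example of a custom criterion you might add:
  ['Freshness', 'Is the information current, or does it rely on outdated facts?']
];

function buildQuestion(criteriaList, scoringInstructions) {
  const criteriaText = criteriaList
    .map(([name, test]) => `- **${name}:** ${test}`)
    .join('\n\n');
  return [
    '**The Score is: %%**',
    'You are an expert in detecting low-quality, thin, and unhelpful content. Start your response with **The Score is: %%** followed by your analysis.',
    '**Assessment:**',
    'Consider the following criteria, citing specific examples from the text to support your analysis:',
    criteriaText,
    scoringInstructions
  ].join('\n\n');
}

// scoringInstructions would hold the Reasoning and Score sections from the original prompt.
const question = buildQuestion(criteria, '**Score:**\n\nAssign a probability score (0-100%) ...');
console.log(question);
```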

5. Can I use this method to analyze non-English content?

Yes, OpenAI’s models support multiple languages. However, the effectiveness may vary based on the language and the model used.

6. What are the API rate limits?

API rate limits depend on your OpenAI account and the model used. Check OpenAI’s rate limit guidelines for more information.
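If you hit rate limits (HTTP 429) during a crawl, besides crawling fewer URLs at a time, a common pattern is to retry with exponential backoff. A minimal sketch, assuming you wrap the `fetch` call from the snippet: `withRetry`, `sleep`, and their parameters are hypothetical, and the delays are arbitrary starting points. Keep them modest, since each retry delays the result for that URL.

```javascript
// Hypothetical helper: retry an async request when the API responds with
// HTTP 429 (rate limited) or a 5xx error, waiting longer after each attempt.
function sleep(ms) {
  return new Promise(resolve => setTimeout(resolve, ms));
}

async function withRetry(makeRequest, { maxRetries = 3, baseDelayMs = 1000 } = {}) {
  for (let attempt = 0; ; attempt++) {
    const response = await makeRequest();
    const retryable = response.status === 429 || response.status >= 500;
    if (!retryable || attempt >= maxRetries) {
      // Success, a non-retryable error, or out of retries: hand it back.
      return response;
    }
    // Exponential backoff: base, 2x base, 4x base, ...
    await sleep(baseDelayMs * 2 ** attempt);
  }
}

// Usage inside the snippet (sketch), where `options` stands for the
// method/headers/body object the snippet already passes to fetch:
// withRetry(() => fetch('https://api.openai.com/v1/chat/completions', options))
//   .then(response => { /* same !response.ok handling as before */ });
```

If the retries run out, the last response is returned unchanged, so the snippet’s existing `!response.ok` check still reports the API’s error message to the Spider.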